BigWorld

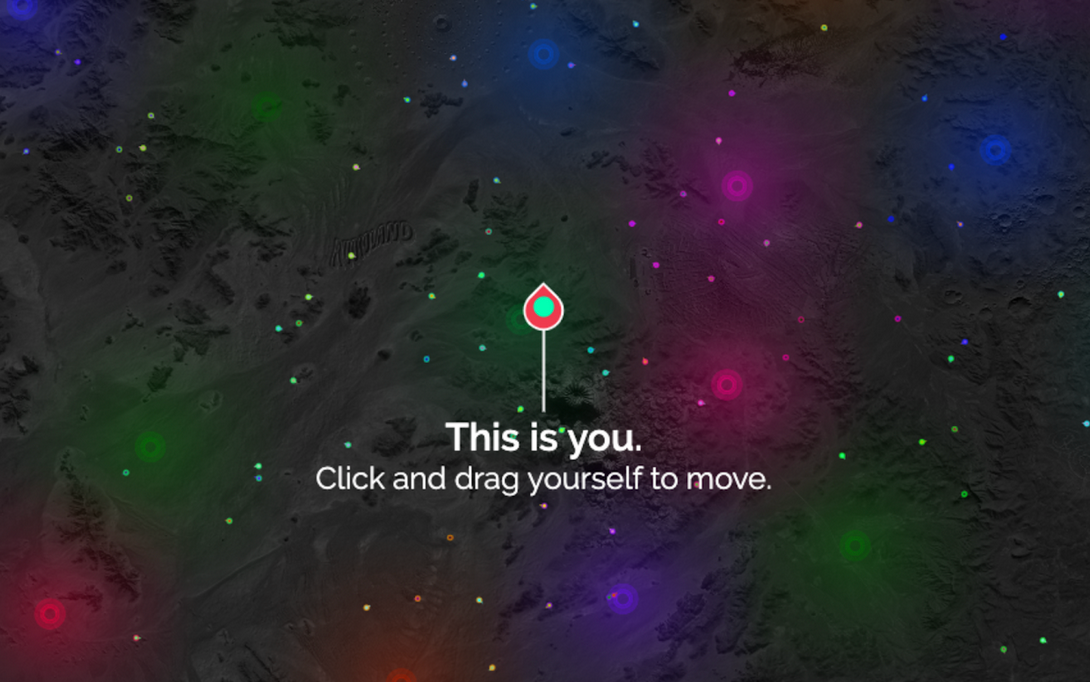

As our exploration of enterprise use cases wound down, we started to think about the minimal requirements for someone to express themselves in a virtual world. Our first requirement was spatial audio, so we envisioned a world of audio. From afar, it might look like dots of light across the globe.

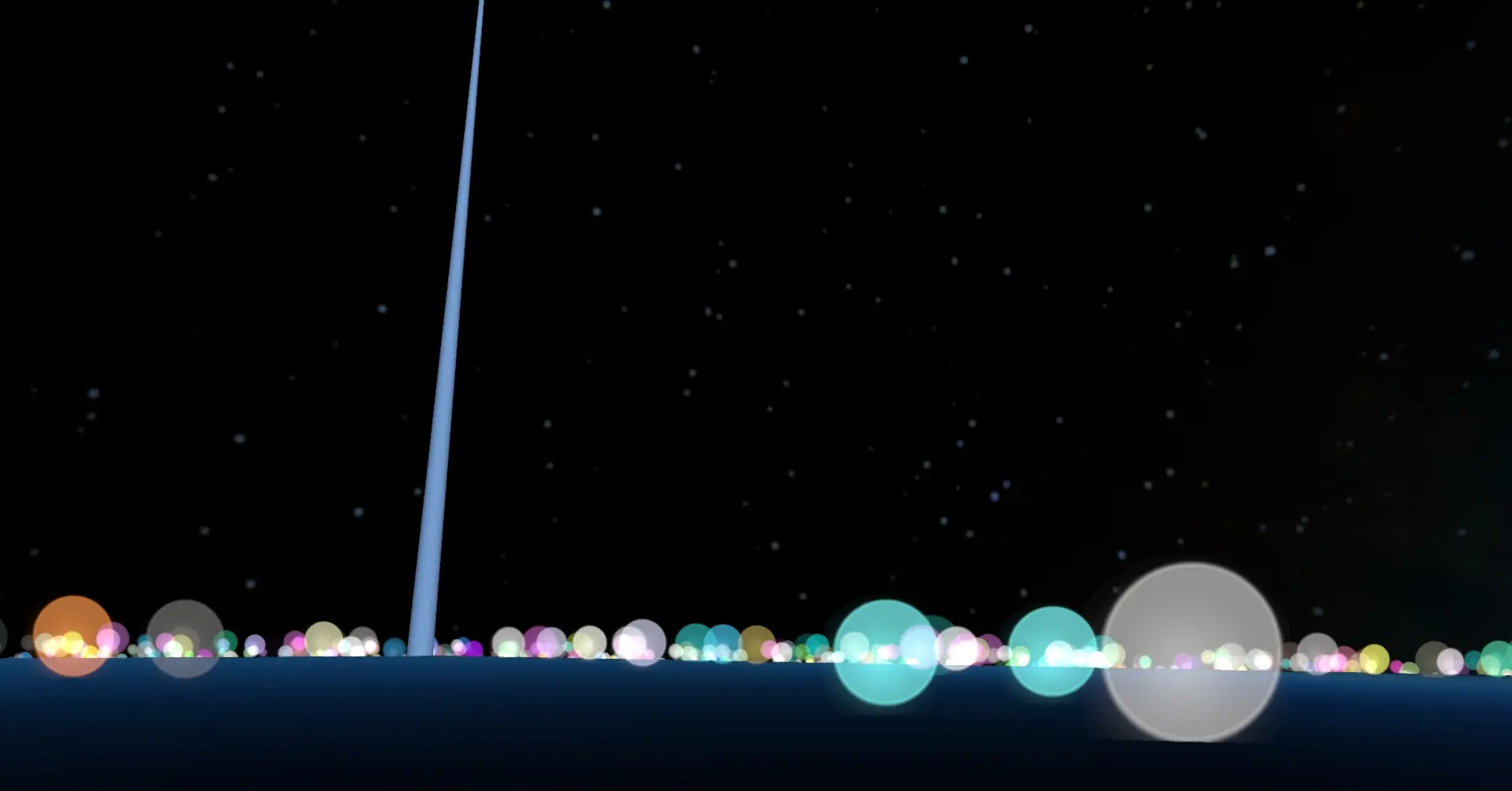

It might look like people congregating around audio mixers to chat.

What about avatars? Do they need eyes?

...or maybe just a sense of direction? That's where we landed with our final 3D avatars.

With that, we shut down our 3D virtual world servers for good. Amid the remote work and social distancing of the COVID-19 pandemic, we began to explore spatial audio in a 2D world.

I worked with one other engineer on the initial prototypes, in which users were just dots around mixers on a 2D map. As we progressed, the UI became more intricate. It relied on substantial math in HTML canvas elements to refine avatar movement and rotation while preserving the map's orientation.
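To give a flavor of that kind of math, here is a minimal sketch. It is not the original BigWorld code. It assumes a north-up map that is only translated and scaled, never rotated. Each avatar glyph is rotated independently to show its heading. The `Camera` shape and the function names are hypothetical.

```typescript
// Hypothetical types for illustration only; not the original BigWorld code.
interface Vec2 { x: number; y: number; }

interface Camera {
  center: Vec2;   // world point shown at the middle of the canvas
  zoom: number;   // screen pixels per world unit
  width: number;  // canvas width in pixels
  height: number; // canvas height in pixels
}

// Map a world position to canvas pixels. Only translation and uniform scale
// are applied, so "up" on screen always stays "north" on the map.
// Canvas y grows downward, so world y is flipped.
function worldToScreen(p: Vec2, cam: Camera): Vec2 {
  return {
    x: cam.width / 2 + (p.x - cam.center.x) * cam.zoom,
    y: cam.height / 2 - (p.y - cam.center.y) * cam.zoom,
  };
}

// Heading in radians from a movement vector, measured counterclockwise from
// world +x. A stationary avatar keeps its previous heading.
function headingFromVelocity(v: Vec2, previous: number): number {
  if (Math.hypot(v.x, v.y) < 1e-6) return previous;
  return Math.atan2(v.y, v.x);
}

// Canvas rotation is clockwise-positive because screen y points down, so a
// counterclockwise world heading becomes a negative canvas angle.
function canvasRotation(heading: number): number {
  return -heading;
}

// Turn toward a target heading by at most maxStep radians along the shortest
// arc. The result is not re-normalized, which is harmless for rotation.
function turnToward(current: number, target: number, maxStep: number): number {
  // Wrap the difference into (-PI, PI] so we never spin the long way round.
  const delta = Math.atan2(Math.sin(target - current), Math.cos(target - current));
  if (Math.abs(delta) <= maxStep) return target;
  return current + Math.sign(delta) * maxStep;
}
```

When drawing, each avatar would be placed with `ctx.translate(s.x, s.y)` and `ctx.rotate(canvasRotation(heading))` inside a `ctx.save()`/`ctx.restore()` pair. Only the glyph turns, and the map underneath keeps its orientation.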

In the following videos, you can see the follow feature I implemented, which let users lead friends around the map while staying within audio range of each other. We always focused on accessibility and simple, intuitive UI. I implemented text-to-speech (TTS) and speech-to-text (STT) features to make the platform accessible to as many users as possible.
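One way a follow feature like this could work is sketched below. This is an assumption, not the shipped implementation. On each tick, the follower moves toward the leader at a capped speed and stops a short distance behind. Because that distance is smaller than the audio radius, the pair stays within earshot. All constants and function names here are hypothetical.

```typescript
// Hypothetical sketch; constants are illustrative, not the product's values.
interface Vec2 { x: number; y: number; }

const FOLLOW_DISTANCE = 1.5; // world units the follower trails behind
const MAX_SPEED = 4;         // world units per second
const AUDIO_RADIUS = 10;     // assumed distance within which users hear each other

// One simulation step: move toward the leader, but stop at FOLLOW_DISTANCE
// so the follower doesn't land on top of the leader.
function followStep(follower: Vec2, leader: Vec2, dt: number): Vec2 {
  const dx = leader.x - follower.x;
  const dy = leader.y - follower.y;
  const dist = Math.hypot(dx, dy);
  const gap = dist - FOLLOW_DISTANCE;
  if (gap <= 0) return { ...follower }; // already close enough
  const step = Math.min(gap, MAX_SPEED * dt); // never overshoot the gap
  return {
    x: follower.x + (dx / dist) * step,
    y: follower.y + (dy / dist) * step,
  };
}

// True when two users are close enough to hear each other.
function isWithinAudioRange(a: Vec2, b: Vec2, radius: number = AUDIO_RADIUS): boolean {
  return Math.hypot(b.x - a.x, b.y - a.y) <= radius;
}
```

Capping the step by `MAX_SPEED * dt` keeps the motion frame-rate independent. Clamping it to the remaining gap avoids jitter once the follower arrives.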

Next, I worked on some fun social features, including an early integration of GPT-3. I created a deck of cards and experimented with separating audio stems for a unique music experience.

We hosted quite a few virtual concerts, and you can still see some of the BigWorld 2D audio demos at highfidelity.com/spatial-audio-demos.